Float Precision or Single Precision in Programming

Dec 16, 2024 · Float Precision, also known as Single Precision, refers to the way in which floating-point numbers, or floats, are represented and the degree of accuracy they maintain.
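A minimal sketch of what "degree of accuracy" means for single precision. It assumes Python's `struct` module as a stand-in for a native 32-bit `float`: packing with the `f` format rounds the value to single precision. `to_float32` is a hypothetical helper name, not part of any library.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (an IEEE 754 double) to the nearest single-precision value."""
    # Packing with 'f' stores the value as a 32-bit float; unpacking widens it
    # back to a double so the rounding error can be inspected.
    return struct.unpack("<f", struct.pack("<f", x))[0]


single = to_float32(0.1)
print(repr(0.1))          # the double closest to 0.1
print(repr(single))       # 0.10000000149011612 (the float32 closest to 0.1)
print(abs(single - 0.1))  # extra error introduced by dropping to single precision
```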

Complete Guide to Floating Point Representation: IEEE 754

Aug 18, 2025 · This guide explores how computers store decimal numbers using floating-point representation, why we sometimes get unexpected results like 0.1 + 0.2 ≠ 0.3, and how …
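The 0.1 + 0.2 ≠ 0.3 case can be reproduced directly. This sketch assumes Python, whose `float` is an IEEE 754 double; `fractions.Fraction` converts a float to the exact rational value it stores, showing that the literal `0.1` is not exactly one tenth.

```python
from fractions import Fraction

total = 0.1 + 0.2
print(total == 0.3)  # False
print(repr(total))   # 0.30000000000000004

# The exact value stored for the literal 0.1 is a ratio with a power-of-two denominator.
print(Fraction(0.1))                     # 3602879701896397/36028797018963968
print(Fraction(0.1) == Fraction(1, 10))  # False
```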

Single-precision floating-point format - Wikipedia

Single-precision floating-point format (sometimes called FP32, float32, or float) is a computer number format, usually occupying 32 bits in computer memory; it represents a wide range of …
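To see how the 32 bits are laid out, the sketch below splits a single-precision encoding into its sign (1 bit), biased exponent (8 bits, bias 127), and fraction (23 bits) fields. It assumes Python's `struct` for the packing; `float32_fields` is a hypothetical helper for illustration.

```python
import struct


def float32_fields(x: float) -> tuple[int, int, int]:
    """Split the 32-bit single-precision encoding of x into (sign, exponent, fraction)."""
    # Big-endian pack, then read the 4 bytes back as one unsigned 32-bit integer.
    bits = int.from_bytes(struct.pack(">f", x), "big")
    sign = bits >> 31               # 1 bit
    exponent = (bits >> 23) & 0xFF  # 8 bits, biased by 127
    fraction = bits & 0x7FFFFF      # 23 bits; the leading 1 of normal numbers is implicit
    return sign, exponent, fraction


print(len(struct.pack(">f", 1.0)))  # 4 bytes = 32 bits
print(float32_fields(1.0))          # (0, 127, 0): +1.0 * 2**(127 - 127)
print(float32_fields(-2.5))         # (1, 128, 2097152): -1.25 * 2**(128 - 127)
```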

c++ - Understanding floating point precision - Stack Overflow

Aug 21, 2012 · Floating point numbers are basically stored in scientific notation. As long as they are normalized, they consistently have the same number of significant figures, no matter …
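The "scientific notation" view can be checked with Python's `math` module (the question itself is about C++; Python is used here on the assumption that its `float` is an IEEE 754 double, as on mainstream CPython builds). `math.frexp` exposes the binary mantissa and exponent, and `math.ulp` (Python 3.9+) shows that the gap between neighbouring values scales with magnitude, so the relative spacing stays constant for normalized numbers.

```python
import math

# math.frexp(x) returns (m, e) with x == m * 2**e and 0.5 <= |m| < 1.
for x in (0.15625, 1.0, 1024.0):
    m, e = math.frexp(x)
    print(x, "=", m, "* 2 **", e)

# math.ulp(x) is the gap to the next representable double. The absolute gap
# grows with x, but the relative gap (ulp / x) stays the same, which is why
# normalized values keep the same number of significant bits.
for x in (1.0, 1024.0, 2.0 ** -20):
    print(x, math.ulp(x), math.ulp(x) / x)
```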

Floating-Point Precision in Programming - DEV Community

Jan 28, 2025 · By understanding how floating-point numbers work and using these techniques, you can avoid unexpected behavior in your code and write programs that handle numbers with …
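The snippet does not list its techniques, so the sketch below shows two commonly used ones in Python as an assumption rather than the article's own list: tolerance-based comparison with `math.isclose`, and error-compensated summation with `math.fsum`.

```python
import math

a = 0.1 + 0.2
print(a == 0.3)              # False: exact comparison is fragile
print(math.isclose(a, 0.3))  # True: default relative tolerance rel_tol=1e-09

# A relative tolerance alone fails near zero, so pass abs_tol there.
print(math.isclose(1e-12, 0.0))                # False
print(math.isclose(1e-12, 0.0, abs_tol=1e-9))  # True

# Repeated addition compounds rounding error; math.fsum tracks partial sums
# to avoid that loss of precision.
total = 0.0
for _ in range(10):
    total += 0.1
print(total)                  # 0.9999999999999999
print(math.fsum([0.1] * 10))  # 1.0
```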

C++ Floating Point Precision Explained Simply

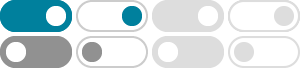

C++ floating point precision refers to how accurately, and over what range, decimal numbers can be represented; both are affected by the choice of data type (float, double, or long double) and …
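The practical gap between `float` and `double` can be illustrated in Python, assuming (as on typical platforms) that C++ `float` corresponds to a 32-bit single and `double` to Python's own `float`. Python has no built-in `long double`, so that type is not shown. The integer 2**24 + 1 needs 25 significant bits: a double holds it exactly, while single precision (24-bit significand) rounds it away. `to_float32` is a hypothetical helper.

```python
import struct
import sys


def to_float32(x: float) -> float:
    """Round a double to the nearest single-precision value (hypothetical helper)."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


n = 2.0 ** 24 + 1      # 16777217 needs 25 significant bits
print(n)               # 16777217.0: exact in a double (53-bit significand)
print(to_float32(n))   # 16777216.0: rounded in single precision (24-bit significand)

print(sys.float_info.mant_dig)  # 53 significand bits for Python's float (a C double)
print(sys.float_info.dig)       # 15 decimal digits that survive a round trip
```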

Floating Point Precision in Python: A Comprehensive Guide

Apr 9, 2025 · However, working with floating point numbers comes with a crucial aspect: precision. Understanding floating point precision is essential for writing accurate and reliable …
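In Python specifically, two standard-library tools make the precision issue visible and avoidable. Formatting with more digits than `repr()` shows reveals the stored binary value, and `decimal.Decimal` built from strings keeps exact decimal digits. This is a minimal sketch, not necessarily the approach of the guide above.

```python
from decimal import Decimal

# Printing more digits than repr() shows reveals the stored binary value.
print(format(0.1, ".20f"))  # 0.10000000000000000555

# Decimal built from strings keeps exact decimal digits.
print(Decimal("0.1") + Decimal("0.2") == Decimal("0.3"))  # True

# Decimal built from a float inherits the float's binary representation error.
print(Decimal(0.1) == Decimal("0.1"))  # False
```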

Floating Point Precision Issues Explained: How to Handle

Jul 25, 2025 · Explore why floating-point numbers produce imprecise decimal results and discover practical solutions and alternative methods for handling these common calculation errors.
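One widely used alternative (assumed here; the snippet does not say which methods the article covers) is fixed-point arithmetic: store money as integer minor units such as cents, so addition is exact, and convert to a decimal string only for display. `format_cents` is a hypothetical helper.

```python
def format_cents(cents: int) -> str:
    """Render an integer number of cents as a decimal string, e.g. 1234 -> '12.34'."""
    sign = "-" if cents < 0 else ""
    whole, frac = divmod(abs(cents), 100)
    return f"{sign}{whole}.{frac:02d}"


# Store amounts as integer cents instead of binary floats.
prices_cents = [10, 20]      # 0.10 and 0.20
total = sum(prices_cents)    # integer addition is exact
print(total == 30)           # True
print(format_cents(total))   # 0.30
print(format_cents(-1234))   # -12.34
```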

Floating Point Representation - GeeksforGeeks

Oct 8, 2025 · Floating-point representation lets computers work with very large or very small real numbers using scientific notation. IEEE 754 defines this format using three parts: sign, …
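The "very large or very small" range can be made concrete for single precision. This sketch assumes Python's `struct` to reinterpret bit patterns as 32-bit floats; `float32_from_bits` is a hypothetical helper. It builds the largest finite value and the smallest normal value, then shows that packing a value beyond the range raises `OverflowError`.

```python
import struct


def float32_from_bits(bits: int) -> float:
    """Reinterpret a 32-bit pattern as an IEEE 754 single-precision value."""
    return struct.unpack(">f", bits.to_bytes(4, "big"))[0]


largest = float32_from_bits(0x7F7FFFFF)          # exponent field 254, fraction all ones
smallest_normal = float32_from_bits(0x00800000)  # exponent field 1, fraction 0
print(largest)          # about 3.4e38
print(smallest_normal)  # about 1.2e-38

try:
    struct.pack(">f", 1e39)  # beyond the single-precision range
except OverflowError as exc:
    print("OverflowError:", exc)
```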

Understanding Floating-Point Arithmetic: Precision, …

Jul 5, 2024 · Most hardware and programming languages use the IEEE 754 standard for floating-point numbers. The common formats are single precision (32 bits) and double precision (64 bits).
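The two common formats can be compared by storage size and machine epsilon (the gap between 1.0 and the next representable value). This sketch assumes Python's `struct` standard sizes for `f` and `d`, and that Python's `float` is a double. The single-precision epsilon is found by reinterpreting the bit pattern just above 1.0.

```python
import struct
import sys

# Standard storage sizes ('<' selects standard, not native, sizes).
print(struct.calcsize("<f"), struct.calcsize("<d"))  # 4 8 (bytes)

# Machine epsilon: the gap between 1.0 and the next representable value.
next_after_one_32 = struct.unpack(">f", (0x3F800001).to_bytes(4, "big"))[0]
eps32 = next_after_one_32 - 1.0
eps64 = sys.float_info.epsilon

print(eps32 == 2.0 ** -23)  # True: 23 stored fraction bits in single precision
print(eps64 == 2.0 ** -52)  # True: 52 stored fraction bits in double precision
```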